Benchmarking programs is easy; but benchmarking programs in a reproducible and deterministic manner is very hard. It is important, because we want to compare the performance of different models on the same set of programs irrespective of a reserarcher's server configuration. Moreover, you can even wind up in scenarios where you can benchmark the same exact program and accidentally believe one is much faster than the other.

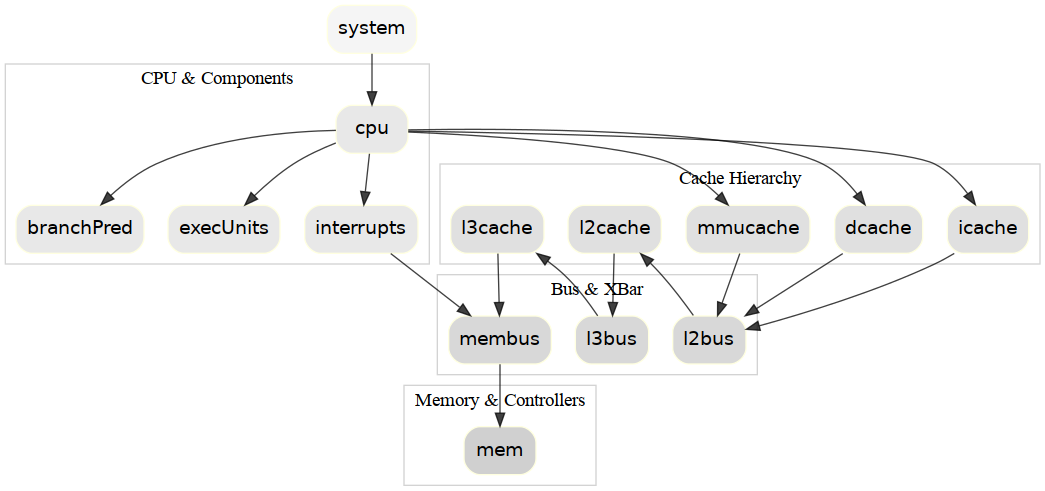

We built a custom evaluation framework that benchmarks program execution time in a highly-reliable manner. We built an execution sandbox based on the gem5 simulator and is fully deterministic modulo program termination. For our experiments, we use a simulated CPU of the Intel Skylake CPU. We provide an easy-to-use docker image and API that can be used to reproduce our results and for other researchers to continue to use for program optimization research.

We investigate numerous ways to improve the performance of LLMs for program optimization. Our best public access model is a fine-tuned version of CodeLlama (13B parameters) that we fine-tuned on our dataset with performance-conditioning. This model outperforms standard GPT-3.5 Turbo and GPT-4 with standard prompting strategies. We also were able to fine-tune a version of GPT-3.5 Turbo using our dataset of program optimizations and self-play (using programs and optimizations both sampled from an LLM) to obtain a model that is even stronger.

| Model + Configuration | %Optimized | Agg. Speedup | %Correct |

|---|---|---|---|

| Average Human | 100% | 3.66 | 100% |

| Best of all Humans in the Dataset | 100% | 9.56 | 100% |

| GPT3.5 With Chain of Thought Prompts, Best@1 | 21.37% | 1.25 | 65.95% |

| GPT4 With Chain-of-Thought Prompts, Best@1 | 26.99% | 1.32 | 63.09% |

| GPT3.5 With Retrieval-based-Prompting, Best@1 | 28.02% | 1.55 | 79.65% |

| GPT4 With Retrieval-based-Prompting, Best@1 | 51.02% | 2.53 | 79.35% |

| Fine-Tuned CodeLlama 13B w/ Perf-Conditioning, Best@1 | 32.00% | 2.95 | 38.55% |

| Fine-Tuned Gpt3.5 w/Self-Play, Best@1 | 45.50% | 3.02 | 61.55% |

| Fine-Tuned CodeLlama 13B w/ Perf-Conditioning, Best@8 | 66.56% | 5.65 | 70.96% |

| Fine-Tuned Gpt3.5 w/Self-Play, Best@8 | 87.63% | 6.86 | 95.09% |

| Fine-Tuned Gpt3.5 w/Self-Play, Best over all Submissions, 40 samples Per Problem | 99.80% | 9.64 | 99.90% |

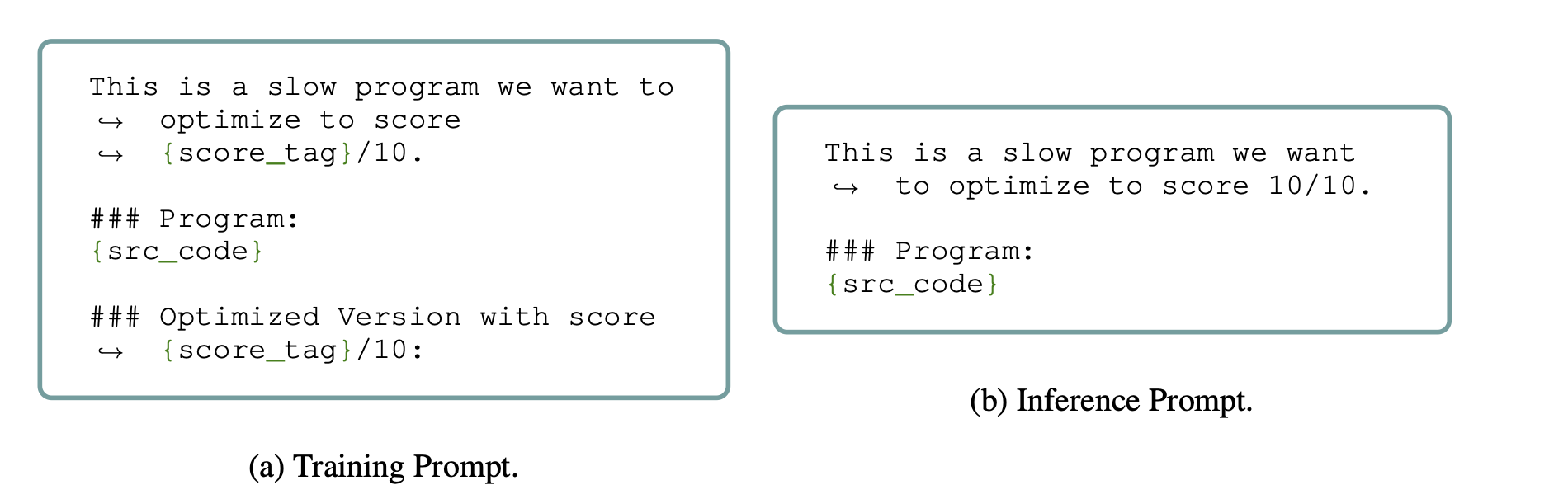

Programs can typically be written in many ways with different performance profiles. When training a model to predict performance-improving edits with a large dataset, it may be trained on a mix of large and small improvements, without any information on which improvements are more desirable than others. We introduce performance tags during training by associating each “fast” program with a tag indicating the optimal achievable performance across all solutions in the dataset.

Specifically, the tag indicates how close that program is to peak performance on a binned-scale {1, 2, . . . , 10}. Then at test time, we prompt the model with a test input and a maximal score tag “10/10”, directing it to generate a highly-optimized solution.

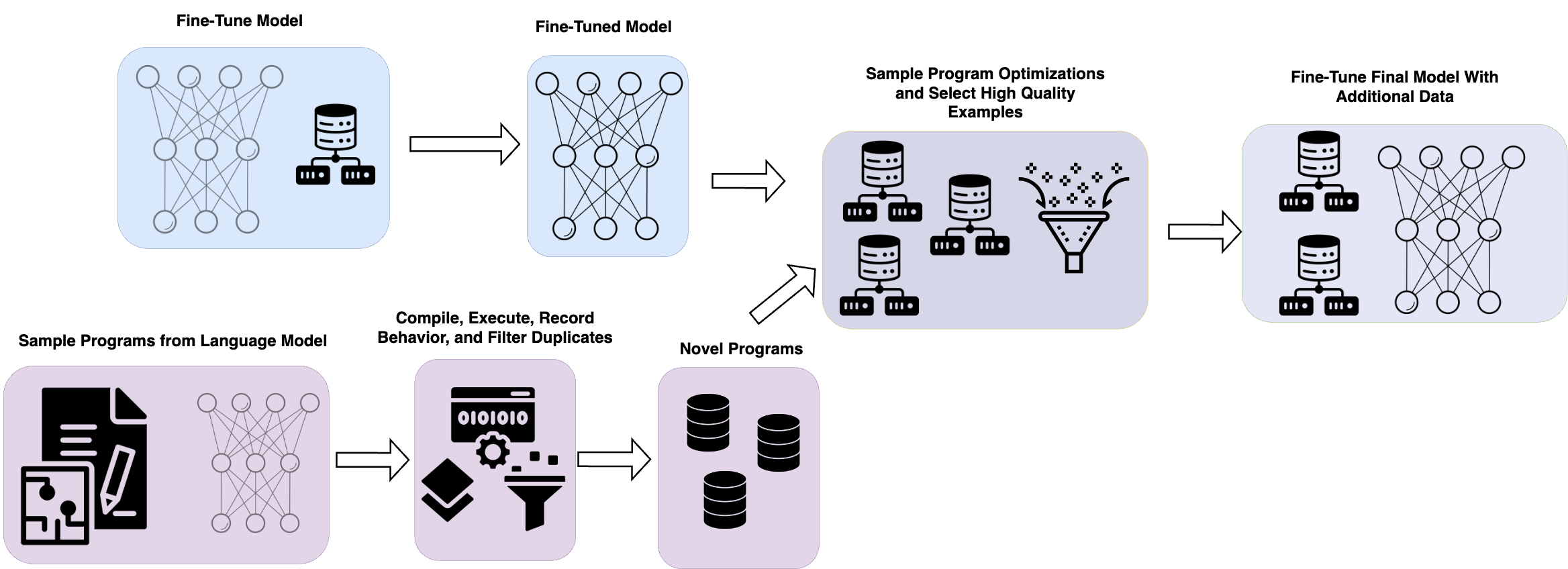

In an attempt to boost the performance of our models, we also investigate the use of self-play for program optimization as a data augmentation technique. Because there is a limited set of programs in our dataset, we use an off-the-shelf language model to generate new programs and a high-quality fine-tuned model to generate new optimizations. After taking some rigorous steps to ensure the generated programs are semantically novel and the optimizaitons are non-trivial, we use the generated programs and optimizations to create new program optimization pairs. The self-play notion comes from the fact that one model is used to generate the programs to solve and the other model is used to generate solve/propose the optimizations.

Our best model without self-play was with GPT3.5 turbo, our best fine-tuned model was trained with 4,085 high quality pairs. We were able to sample 3,314 novel programs and obtain 1,485 high-quality optimizations. Using these additional 1,485 optimizations helped improve the performance of our fine-tuned model. We also performed an ablation by adding 1,485 next-best programs from the PIE dataset for fine-tuning GPT3.5 turbo, but these pairs led to performance degradation.

@inproceedings{

pie_iclr_2024_spotlight,

title={Learning Performance-Improving Code Edits},

author={Alexander Shypula and Aman Madaan and Yimeng Zeng and Uri Alon and Jacob R. Gardner and Yiming Yang and Milad Hashemi and Graham Neubig and Parthasarathy Ranganathan and Osbert Bastani and Amir Yazdanbakhsh},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024},

url={https://openreview.net/forum?id=ix7rLVHXyY}

}